Overview

Technology is always changing. As it does, companies get opportunities to adopt new software and technologies that improve internal operations and help them compete more effectively in their markets. A key part of moving to a new technology is a smooth data migration from the existing platform to the new one.

Many firms have experienced frustrations, and even failures, when migrating data from old systems to new ones. What follows is a short practical outline and a set of straightforward recommendations to help make your data migration project a success.

First, it is important to note that a data source is not just a computerized repository of data. In most cases, it is an intelligently designed repository put together and managed by IT people, who are experts at creating and managing a data repository, working closely with their business counterparts who best know how the business intends to use that data. It is those same two groups of people who are the knowledge experts with regard to how and why the data was stored and related the way it was.

Define/Adopt a practical process:

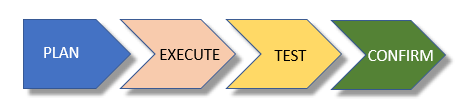

The following sections describe a simple disciplined process that can be expanded, as needed, to address increasingly complex data migration projects.

Recommendation 1 - PLAN

The first recommendation is to talk to the IT and business experts, ask plenty of questions, take copious notes, and play your notes back to them to confirm that you understood what they said.

Here are some do's regarding engaging the IT & Business SMEs:

Identify/find the recognized business data owners

Interview the Business SMEs – Here are some questions to ask:

Identify/find the recognized IT data owners

Interview the IT Data SMEs – Here are some questions to ask:

Map the Data from old system to new

Data mapping is the process of matching fields from one database to another. The goal is to move the data to the new location and ensure that it is accurate and usable. A failure in this step means that data may be corrupted, lost, or misapplied as it moves to its destination.
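As a rough illustration of what a data map captures, here is a minimal Python sketch. The legacy column names (`CUST_NM`, `CUST_NO`, `CRT_DT`), the target field names, and the date format are all hypothetical, invented for this example rather than taken from any real project. The point is that each source field is paired with a target field and a conversion rule, and that anything unmapped is surfaced rather than silently dropped.

```python
from datetime import datetime


def to_iso_date(value):
    """Convert an MM/DD/YYYY string (assumed legacy format) to YYYY-MM-DD."""
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()


# Hypothetical data map: legacy column -> (target field, converter).
FIELD_MAP = {
    "CUST_NM": ("CustomerName", str.strip),
    "CUST_NO": ("CustomerId", int),
    "CRT_DT": ("CreatedDate", to_iso_date),
}


def map_record(source_row, field_map=FIELD_MAP):
    """Build a target record, failing loudly on unmapped or missing fields."""
    unmapped = set(source_row) - set(field_map)
    missing = set(field_map) - set(source_row)
    if unmapped or missing:
        raise ValueError(
            f"unmapped={sorted(unmapped)} missing={sorted(missing)}"
        )
    return {
        target: convert(source_row[source])
        for source, (target, convert) in field_map.items()
    }
```

Raising an error on an unmapped field is a deliberate choice in this sketch: a field that nobody mapped is usually a question for the SMEs, not something to discard quietly.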

Create a mapping workbook

Here are the high-level steps to creating a data map:

NOTE: A data map is a living document that will likely require updates over time. There may be:

Recommendation 2 - EXECUTE

If a data migration project is small enough, you could adopt a DIY approach and write your own migration scripts to ferry data from source to target. Unfortunately, this approach does not scale well and is typically harder to debug when things go wrong.

Use a Tool

Professional data migration tools, both on-prem and cloud-based, provide reliability, a visual work environment, debugging, logging, performance, and scalability. Cloud-based tools may scale dynamically, while on-prem solutions are limited by the hardware they run on.

Regardless of the approach taken, there are some practical things to do.

A recent data migration project called for CSV table exports from a legacy database to be migrated to cloud-based SharePoint lists. The key to starting with a CSV export is to make sure the export is complete with regard to both the number of records and the fields within each record. Careful counts of records, fields, and row widths are important to ensure your migration project is on solid footing from the start.
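The counts described above can be sketched in a few lines of Python using only the standard `csv` module. This is an illustrative sketch, not the tooling used on the project: the function name `profile_csv` and the shape of its result are assumptions made for this example. It counts records, checks the header against the fields you expect, and flags any record whose width differs from the header.

```python
import csv
import io
from collections import Counter


def profile_csv(text, expected_fields):
    """Count records and flag rows whose field count differs from the header."""
    reader = csv.reader(io.StringIO(text, newline=""))
    header = next(reader)
    width_counts = Counter()
    ragged_records = []
    records = 0
    for record_no, row in enumerate(reader, start=1):
        records += 1
        width_counts[len(row)] += 1
        if len(row) != len(header):
            # Record numbers are 1-based and exclude the header row.
            ragged_records.append(record_no)
    return {
        "records": records,
        "header_matches": header == expected_fields,
        "width_counts": dict(width_counts),
        "ragged_records": ragged_records,
    }


sample = 'id,name,city\n1,Ann,Boston\n2,"Lee, Bo",Austin\n3,Cy\n'
report = profile_csv(sample, ["id", "name", "city"])
```

Using a real CSV parser rather than splitting on commas matters here, because quoted values that contain commas or line breaks would otherwise show up as false width errors. Compare the `records` figure against the row count reported by the legacy database for the same table.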

Here are some practical ways to Interrogate/test the CSV export:

Note: Data migration efforts often expose problems with the source data that the business and IT users were unaware of.

Create an issue log

Run a test migration

Recommendation 3 - TEST

Test the source to target migration

For one of our recent projects (legacy source data migrated to SharePoint lists), we created a set of Excel-based Power Query reports that pulled data from the SharePoint lists and compared that data side by side with the CSV file exports from the legacy database tables.
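The same kind of side-by-side comparison can also be scripted. The following Python sketch is an alternative illustration, not the Power Query workbook described above. It assumes both datasets have already been loaded as lists of dictionaries, that the target records use the same field names as the source (that is, the mapping has already been applied in reverse or the names were kept), and that the key field is unique. The function name `compare_datasets` is hypothetical.

```python
def compare_datasets(source_rows, target_rows, key):
    """Compare two lists of dict records that share field names and a unique key."""
    source = {row[key]: row for row in source_rows}
    target = {row[key]: row for row in target_rows}

    mismatched = {}
    for k in source.keys() & target.keys():
        # Collect (source value, target value) for every field that differs.
        diffs = {
            field: (source[k][field], target[k].get(field))
            for field in source[k]
            if source[k][field] != target[k].get(field)
        }
        if diffs:
            mismatched[k] = diffs

    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": mismatched,
    }


legacy = [
    {"id": "1", "name": "Ann", "city": "Boston"},
    {"id": "2", "name": "Lee", "city": "Austin"},
    {"id": "3", "name": "Cy", "city": "Denver"},
]
migrated = [
    {"id": "1", "name": "Ann", "city": "Boston"},
    {"id": "2", "name": "Lee", "city": "Dallas"},
    {"id": "4", "name": "Dee", "city": "Miami"},
]
result = compare_datasets(legacy, migrated, key="id")
```

Each non-empty entry in the result (a missing key, an unexpected key, or a field-level mismatch) is a candidate line for the issue log.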

Here are some of the many tests we performed against the source and target datasets:

Continue to use the Issue Log

Recommendation 4 - CONFIRM

Share your data comparison workbook with the IT and Business SMEs

Conclusion

Having successfully migrated a small subset of data, tested the migration yourself, and had it validated by the IT and business teams, you are now ready to iterate through the remaining table migrations, repeating Recommendations 2, 3, and 4.

As you can see, "Recommendation 1 – Plan" is by far the most involved step in your data migration journey. In that step, you follow a structured and disciplined process to learn about the source data and system, so that you understand the data and how it is used well enough to migrate it with quality into the new repository.

Recommendation 2 – Execute begins with ensuring that the source data is complete: all the data intended to be migrated has been identified and made available. This step marries the source data with what you learned during planning and migrates it into a physical "Target" data repository.

Recommendation 3 – Test applies the proof steps, carefully comparing source to target to ensure the data made the journey intact and, more importantly, arrived in a form that gives the business full confidence in the result. In this step you prove to yourself that you got it right by creating the "Data Comparison Workbook", which compares source to target using a variety of tests.

Recommendation 4 – Confirm has you share the data migration assets you created with the IT and Business SMEs so they can confirm what you have already proven to yourself. In this last step, transparency is the approach that best convinces the customer that you have been a good steward of their data, carefully migrating their precious data assets from the legacy source to a new, more functionally rich platform.

We hope this helps. Good luck on your journey! If you have any additional questions or need help with a migration, contact us!
