What is Microsoft Fabric?

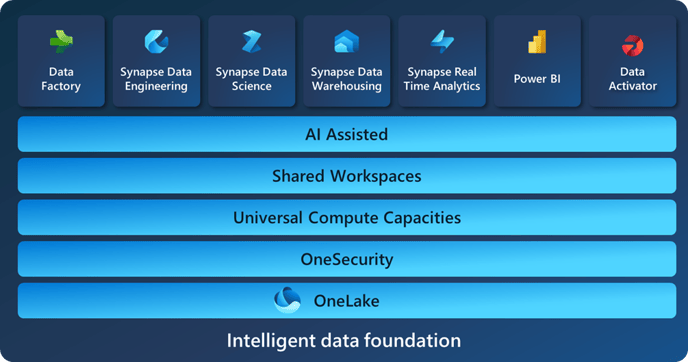

Microsoft Fabric is an end-to-end analytics solution with full-service capabilities including data movement, data lakes, data engineering, data integration, data science, real-time analytics, and business intelligence. Fabric combines the best of Microsoft Power BI, Azure Synapse Analytics, and Azure Data Factory to create a single, unified software as a service (SaaS) platform with seven core workloads, each purpose-built for specific personas and specific tasks.

Microsoft Fabric has caught the imagination of our customers, partners, and community members. Since the platform was announced, 25,000 organizations around the world have adopted Fabric, including 67% of the Fortune 500 (according to this blog post). If that’s true, then getting data into Fabric must be well understood by everyone, RIGHT?

Well, there’s always a BUT: with all the options for getting data into Fabric, do we really understand how and why to use each one?! Here’s your Spyglass guide to the present and the known future of “Getting Data into Microsoft Fabric.”

Options:

Each of the following capabilities provides a means of loading data into Fabric so that you can build and innovate with Data and AI. The guide below offers perspective and guidance based on Spyglass expertise. Opinions may vary, but we like to think ours are as reputable as any, since they are based on real-world experience.

Fabric Pipelines, Dataflows & Notebooks

Notebooks are a code-centric development item built on Apache Spark in Microsoft Fabric. Data engineers write code for data ingestion, data preparation, and data transformation. Data scientists also use notebooks to build machine learning solutions, including creating experiments and models, tracking models, and deploying them.

Data pipelines enable powerful workflow capabilities at cloud scale. With data pipelines, you can build complex workflows that refresh your dataflows, move petabyte-scale data, and define sophisticated control flow. Use data pipelines to build complex ETL and Data Factory workflows that perform many different tasks at scale. Data pipelines include built-in control flow capabilities, such as loops and conditionals, for building workflow logic.

Dataflows provide a low-code interface for ingesting data from hundreds of data sources and transforming it with more than 300 data transformations. Dataflows are built using the familiar Power Query experience that's available today across several Microsoft products.

MS Guidance: “Fabric decision guide - copy activity, dataflow, or Spark” (Microsoft Learn)

Microsoft’s guidance is organized around skillsets and personas. The options overlap heavily in capabilities, ease of use, and the other criteria in the decision guide. As a result, the guide doesn’t always provide the clarity most clients want or need right now. So, here’s our input to that guide:

Choose a standard! When we engage in a data project, we have all these options as well. In the end, standardizing on one approach is more beneficial than flexibility, especially when the tools can ALL do the same job.

- Strive to standardize on data pipelines (copy activity) for sourcing data into Fabric. They scale, and they are enterprise-ready because they’ve been a capability of Azure Data Factory for years. They’re also highly flexible and require minimal code. This has long been the recommended method for getting data into any platform (Synapse, SQL, Databricks, ADLS, etc.).

- Use Dataflows and Notebooks for data transformation, and try to avoid them for data ingestion. Within Fabric, we don’t prescribe which tool to use for data transformation. That choice comes down purely to the skillset and preference of the client and persona (although we like notebooks!). The key point is that we rarely want to use these tools for getting data into Fabric; we much prefer copy activities.
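To make the standard concrete, here is a minimal sketch that encodes it as a function. It assumes only two inputs: whether the task is ingestion or transformation, and whether the team prefers code. The names `Task` and `recommend_fabric_tool` and the returned labels are hypothetical and for illustration only; they are not part of any Fabric API.

```python
from enum import Enum


class Task(Enum):
    """The two jobs this guide distinguishes between (hypothetical type)."""
    INGEST = "ingest"        # getting data INTO Fabric
    TRANSFORM = "transform"  # preparing/transforming data already in Fabric


def recommend_fabric_tool(task: Task, team_prefers_code: bool) -> str:
    """Return the tool suggested by the standard above for a given task.

    Hypothetical helper: names and return strings are illustrative only.
    """
    if task is Task.INGEST:
        # Standardize ingestion on data pipelines (copy activity),
        # regardless of the team's skillset.
        return "Data pipeline (copy activity)"
    # For transformation, the choice is skillset and preference:
    # code-first teams lean toward notebooks, low-code teams toward Dataflows.
    return "Notebook (Spark)" if team_prefers_code else "Dataflow (Power Query)"


for task in Task:
    for prefers_code in (True, False):
        print(task.value, prefers_code, "->", recommend_fabric_tool(task, prefers_code))
```

Note that `team_prefers_code` has no effect for ingestion. That is the point of choosing a standard: the ingestion tool stays the same whoever builds the solution.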

Fabric Shortcuts & Mirroring

Shortcuts are objects in OneLake that point to other storage locations, such as Dataverse (Dynamics & Power Platform), Azure Data Lake, AWS S3, and other OneLake locations. The data is not copied; instead, it is ‘virtually’ accessible within Fabric.

Mirroring provides a modern way of accessing and ingesting data continuously and seamlessly from supported databases and data warehouses into the Data Warehousing experience in Microsoft Fabric. Replication happens in near real time, giving users immediate access to changes in the source! There is no complex setup or ETL for data replication: using only the source’s connection details, data is replicated reliably in near real time.

Use these options when:

- You have external investments that predate Fabric, and your data strategy is to take advantage of Fabric immediately rather than ‘migrating into Fabric’.

- You have application databases running on a database technology that Mirroring supports, and you are using Fabric for analytics.

- You have solutions built in other clouds (currently AWS) and want to integrate them into Fabric for analytics.

- Your data strategy centers on another platform, such as Snowflake for core data warehousing, but you want to expand into Fabric to decentralize analytics.

When supported, strive to use these options as a standard over copy activities.

Azure Databricks, Synapse & Data Factory

Azure Databricks, Synapse, and Data Factory (ADF) are widely recognized as the enterprise-ready data platforms of choice in Azure. Their capabilities overlap with Fabric’s, including Apache Spark compute, lakehouse and warehouse architectures, and data ingestion, preparation, and transformation. They also integrate directly with Fabric today through OneLake. Expanded support is planned through concepts like ‘mount or upgrade a Data Factory into Fabric’.

Use this option when:

- Fabric copy activities have no network connectivity to the source systems, and Mirroring or Shortcuts are not supported. Private network integration between Fabric and network-protected source systems, such as an on-premises SQL instance, is currently slated on the roadmap for mid-2024. Until then, we’d rather standardize on ADF to load data into Fabric than on Fabric Dataflows. We plan to mount or upgrade those ADF pipelines into Fabric once the roadmap features are released.

- You have external investments that predate Fabric, and your data strategy is to take advantage of Fabric immediately rather than ‘migrating into Fabric’.

- Your data strategy centers on another platform, such as Databricks or Synapse, but you want to expand into Fabric to decentralize analytics use cases.
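The source-driven guidance in the two “use this option when” lists can be summarized as an order of preference:

- Shortcuts or Mirroring when supported.
- Otherwise a Fabric copy activity.
- Otherwise ADF when Fabric cannot reach the source.

The sketch below encodes only those connectivity and support checks; it does not model the strategic criteria (prior investments, another central platform). `Source` and `choose_ingestion_path` are hypothetical names. Shortcuts target storage while Mirroring targets databases, so a source usually qualifies for at most one of them; checking Shortcuts first is an assumption, not a rule from the guide.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """Hypothetical description of a source system (illustrative fields only)."""
    name: str
    shortcut_supported: bool     # storage OneLake can point to (e.g., ADLS, S3, Dataverse)
    mirroring_supported: bool    # database on a technology Mirroring supports
    reachable_from_fabric: bool  # Fabric copy activities can reach it over the network


def choose_ingestion_path(src: Source) -> str:
    """Pick an ingestion path following this guide's order of preference."""
    # When supported, prefer Shortcuts/Mirroring over copy activities.
    # (Checking Shortcuts before Mirroring is an assumption.)
    if src.shortcut_supported:
        return "Shortcut"
    if src.mirroring_supported:
        return "Mirroring"
    # Otherwise, the standard: a Fabric data pipeline copy activity.
    if src.reachable_from_fabric:
        return "Fabric data pipeline (copy activity)"
    # No network path from Fabric (e.g., a network-protected on-premises SQL instance):
    # load with Azure Data Factory and plan to mount or upgrade it into Fabric later.
    return "Azure Data Factory"


# Hypothetical example sources.
sources = [
    Source("s3_landing_zone", shortcut_supported=True, mirroring_supported=False, reachable_from_fabric=True),
    Source("app_database", shortcut_supported=False, mirroring_supported=True, reachable_from_fabric=True),
    Source("saas_api", shortcut_supported=False, mirroring_supported=False, reachable_from_fabric=True),
    Source("onprem_sql", shortcut_supported=False, mirroring_supported=False, reachable_from_fabric=False),
]
for s in sources:
    print(f"{s.name}: {choose_ingestion_path(s)}")
```

In practice, the strategic criteria in the lists can override this ordering. One example is keeping an existing ADF investment even when a copy activity could reach the source.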

Conclusion

Fabric and Azure provide a myriad of ways to consume and innovate with data. If you’re looking to expand your data estate for use with Microsoft Fabric, or to go all-in, then the first step is getting data INTO Microsoft Fabric. Understanding when to use each technology requires strategy and planning, because it must account for your existing data capabilities and your use cases within Fabric. This guide can provide input for your journey, but it’s not a one-size-fits-all approach. If you need help planning your journey to Fabric, Spyglass can help. Contact us today!
